Computer Vision: How computers see

Computer Vision and Medical Devices

Computer vision plays a big role in many aspects of everyday life. While subtle, it is used in a wide range of consumer, industrial, and medical products, including cell phone cameras, cars (not just the self-driving variety!), assembly lines, and the software a doctor may use to interpret medical scans.

Computer Vision Benefits

The primary benefit of computer vision is how it can boost the speed and efficiency of human users. The human visual cortex contains roughly 4-6 billion neurons, each much more expressive and interconnected than their digital counterparts. The cost of this incredible flexibility in interpretation is speed.

A human’s mean visual reaction time is roughly 250 milliseconds for simple reactive tasks. Any additional required interpretation can increase this time to seconds or minutes per item. Using computer vision to automate simple tasks (and to recognize what items need expert interpretation!) or to guide the user’s attention can allow individual practitioners to do the work of dozens of unassisted users.

Computer Vision Evolution

Computer vision as a practice has evolved massively since its inception. In the early era of the field, people would be required to define the features that would be searched for within an image. Detection of these human-defined “features” allows for automation in well-controlled conditions but lacks flexibility.

Improved computational power enables these features to be “learned” through training a computer vision model using machine learning (ML). This results in more subtle and robust feature detection which improves the reliability of the final model.

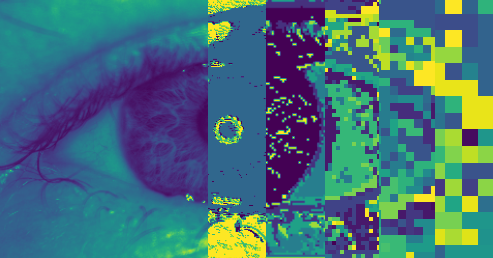

ML-based computer vision models generally harness many layers of “convolutions”, which are essentially sets of filters that it learns during training. These filters are usually much smaller than any of the objects the model is learning to detect. Combining many of them across different scales, an ML-trained computer vision model can reach a surprising level of robustness and accuracy across many different tasks, including image classification and segmentation.

A more recent development is multimodal AI, an improvement on top of language models, which allows the AI to incorporate information from input images and perform assistant-like tasks.

Computer Vision Applications

Classifying images is one of the primary tasks for computer vision models. While simple on its face, classifying images is a highly nuanced task that can span an incredible breadth of complexity depending on both the images and categories.

For image classification, which may be used to flag medical radiographical images, the output of the model is generally one or multiple probabilities, corresponding to each of the classes of image the model must recognize. Throughout the model, different filters are learned that characterize the differences between the classes, which contribute both positively and negatively to the outputs.

Image segmentation can be considered per-pixel image classification instead of class probabilities for the entire image. Each pixel or section of an image is given probabilities, which allow for complex shapes to be isolated from within a picture. In medical applications, this type of computer vision model could be used for margin detection, or to recognize abnormal sections of a medical scan, or even as part of a cell-counting pipeline for laboratory work.

Modern models, such as Meta’s Segment Anything 2, can even perform segmentation across videos by learning more complex features not only across an image’s spatial pixels, but also across the temporal dimension.

Computer Vision Future

While a mature field, computer vision is still constantly evolving and incorporating new techniques and technologies as they emerge. These models utilize the same feature-detection and classification principles as segmentation models. They also include the information with the text inputs to a language model, which can then respond based on information contained in the image inputs. A recent example of this is NVidia’s Describe Anything Model, which seamlessly blends images and language.

The vast majority of ML-enabled medical devices cleared by the FDA are radiological – dealing with medical imaging systems. While we can expect to see other ML techniques further utilized across other medical disciplines, computer vision will remain a mainstay application of ML in the sector.

Image: StarFish Medical

Thor Tronrud, PhD, is a Machine Learning Scientist at StarFish Medical who specializes in the development and application of machine learning tools. Previously an astrophysicist working with magnetohydrodynamic simulations, Thor joined StarFish in 2021 and has applied machine learning techniques to problems including image segmentation, signal analysis, and language processing.

Learn more: 9 ways to use AI in medical device development and a couple of reasons to think twice before engaging with proprietary information.