Statistical Techniques for Streamlining Medical Device Design Verification

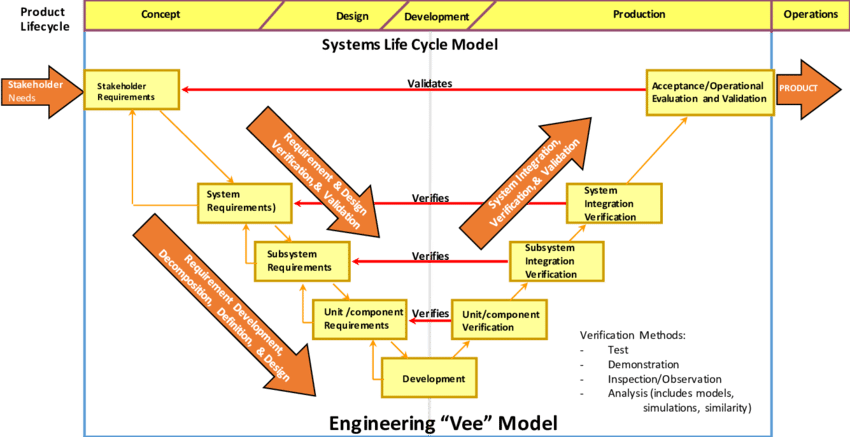

Several techniques can simplify formal verification testing, which expedites the product development process and reduces cost. During verification planning, the required sample size (and therefore the number of devices that need to be built prior to production) can be minimized through the choice of verification method.

We employ strategies to help reduce the verification testing burden by reducing sample sizes and eliminating the need for rigorous protocols wherever possible. Typically, system requirements are verified using one or more of the following methods: inspection, demonstration, analysis, or testing. Medical device design verification by testing can become very onerous if a large sample size is used for all tests.

Regulatory Requirements

Regulators and standards bodies such as the FDA and ISO require that valid statistical techniques, supported by a valid statistical rationale, be applied during the development and testing of medical devices. The relevant requirements are found in 21 CFR Part 820 and ISO 13485:2016. Statistical Procedures for the Medical Device Industry by Dr. Wayne A. Taylor provides guidance and statistical procedures intended to satisfy both.

Demonstration by Analysis and Worst-Case Testing

Taylor suggests the use of methods such as Demonstration by Analysis and Worst-Case Testing to reduce the typically onerous formal verification process. By using validated predictive models or precisely constructed worst-case conditions, one can verify system requirements with a sample size of only 1. This is because these types of verification methods can evaluate the functionality of the device over the entire specification range, rather than just at the target value(s). This strategy of sample size reduction is beneficial for projects where multiple prototypes are not feasible.
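A minimal sketch of the worst-case idea, assuming a validated predictive model is available: evaluate the model at every combination of the specification limits and compare the worst result against the requirement. The model `predicted_flow_ml_per_min`, its input ranges, and the 30 mL/min limit are hypothetical placeholders, not values from Taylor or from a real device. Checking only the corners also assumes the output is monotonic in each input.

```python
from itertools import product

def corner_cases(spec_ranges):
    """Yield every min/max combination of the inputs as a dict."""
    names = list(spec_ranges)
    for combo in product(*(spec_ranges[name] for name in names)):
        yield dict(zip(names, combo))

def predicted_flow_ml_per_min(supply_voltage_v, fluid_viscosity_cp):
    # Hypothetical stand-in for a validated predictive model.
    return 12.0 * supply_voltage_v / fluid_viscosity_cp

# Hypothetical specification ranges: (lower limit, upper limit).
SPEC_RANGES = {
    "supply_voltage_v": (4.5, 5.5),
    "fluid_viscosity_cp": (1.0, 1.5),
}
MIN_REQUIRED_FLOW = 30.0  # hypothetical requirement, mL/min

results = [(c, predicted_flow_ml_per_min(**c)) for c in corner_cases(SPEC_RANGES)]
worst_inputs, worst_flow = min(results, key=lambda r: r[1])
requirement_met = worst_flow >= MIN_REQUIRED_FLOW

print(f"Worst case {worst_inputs}: {worst_flow} mL/min, pass={requirement_met}")
```

In this sketch the lowest predicted flow occurs at minimum voltage and maximum viscosity, so a single physical unit built or configured to that corner would be the natural candidate for a worst-case test.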

Non-Statistical Requirements

All non-statistical system requirements (e.g. the presence of a design feature) can be verified by either Design Review/Inspection or by Digital/Binary Demonstration. Digital/Binary Demonstration is the performance of a non-variable event or sequence of events, such as testing that the device emits an audible tone when powered on. These methods require a sample size of only 1 because the results are inherently repeatable.

Additionally, these methods do not always require a verification protocol as the explicit PASS/FAIL result can often be referenced directly from a previous review or inspection, provided the earlier record meets the documentation requirements (name, date, signature, revision, etc.) for traceability purposes. Some examples of reference material to support Design Review/Inspection verification include:

- Documentation
  - System Architecture
  - Certificates of Conformance (CoCs), etc.
- Virtual design files
  - CAD files
  - Engineering drawings, etc.
- Inspection logs
  - First Article Inspection (FAI) logs, etc.
- Test records
  - Quality Control (QC) test forms, etc.

This methodology can also be applied to the verification of many software requirements. A documented technical review of recorded test results is equivalent to using a Design Review/Inspection to verify system requirements. The following software test methods are routinely performed during the development of the software, and can be referenced during verification to form part of the results: Code Reviews, Static Analyses, Framework Testing, and Non-Statistical Board Testing.

Standard Requirements

Depending on the requirements generation and management strategy used, some requirements may be derived directly from a regulatory standard. Standard requirements, such as those derived from IEC 60601, are formally tested by a third party during certification.

To reduce the amount of testing that must be done internally, include an option in the verification test planning documents to either test as per the applicable standard requirements or reference the latest regulatory test report. This avoids potentially repetitive in-house testing when design changes are made post-verification, and reduces the verification sample size where that sample size is driven by high-risk standard requirements. These tests (e.g. instability testing as per IEC 60601-1) can be done internally as informal confidence tests on a small sample size, rather than as full-scale formal verification tests.

Other Statistical Requirements

Some statistical requirements cannot be verified using the methods outlined above. For these cases, take a source-based risk categorization approach to triage requirements, allowing reduced sample sizes for lower-risk items. Safety-related requirements (sourced from a risk analysis) are tested with the highest confidence level and reliability, resulting in the largest sample sizes.

Stakeholder requirements that are not linked to safety (sourced from a stakeholder of the device, e.g. the primary user) are tested with a lower reliability, permitting a reduced sample size. These sample sizes are determined by a statistical sampling plan such as Proportion Nonconforming for attribute or variable data. Sampling plans typically require large sample sizes in order to achieve statistically significant results; therefore, one of the other methods introduced in this blog should be used whenever possible to achieve the minimum sample size.
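As a rough illustration of how confidence and reliability drive sample size, the sketch below uses the zero-failure attribute relationship n = ln(1 - C) / ln(R), often called the success-run formula. This is a common textbook relationship and not necessarily the sampling plan that Taylor or the authors use. The risk categories and the confidence/reliability levels assigned to them are hypothetical; in practice they would come from a documented procedure.

```python
import math

def zero_failure_sample_size(confidence, reliability):
    """Smallest n such that n passes with zero failures demonstrates
    `reliability` at `confidence` (attribute data, success-run formula)."""
    if not (0 < confidence < 1 and 0 < reliability < 1):
        raise ValueError("confidence and reliability must be between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(reliability))

# Hypothetical risk categories: (confidence, reliability).
# These levels are illustrative assumptions only.
RISK_LEVELS = {
    "safety-related (from risk analysis)": (0.95, 0.99),
    "stakeholder, not safety-related": (0.95, 0.90),
}

for category, (c, r) in RISK_LEVELS.items():
    n = zero_failure_sample_size(c, r)
    print(f"{category}: {c:.0%} confidence / {r:.0%} reliability -> n = {n}")
```

Under these assumed levels, the safety-related requirement needs 299 units with zero failures while the non-safety requirement needs 29, which shows why the sample-size-of-1 methods are worth using wherever they apply.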

Conclusion

Many techniques can simplify formal verification testing, which expedites the product development process and reduces cost. The most impactful methods are Demonstration by Analysis, Worst-Case Testing, Design Review/Inspection, and Digital/Binary Demonstration, all of which can verify their respective requirements with a sample size as small as 1. Standard Requirement Testing also offers major time and cost savings by eliminating formal internal verification tests that duplicate those performed during certification.

Minimizing sample sizes reduces the testing burden while efficiently verifying the device and satisfying all regulatory requirements. This blog is based upon methods used by StarFish Medical. We would be interested to hear other techniques employed by readers in order to simplify medical device design verification.

Image: 161109942 © Gavial31 | Dreamstime.com

Ariana Wilson is an Intermediate Systems Engineer in Product Development at StarFish Medical. She earned her Bachelor of Engineering (BEng) degree in Mechanical Engineering from the University of Victoria, with her fourth year primarily composed of biomedical and fluid mechanics-related courses.

Julian Grove is a Systems Engineer Functional Manager in Product Development at StarFish Medical. He earned his Bachelor of Engineering (BEng) degree in Mechanical Engineering from the University of Victoria and spent several years developing mechanical solutions for medical devices with StarFish while maintaining an eye for the overall system design for complex Enterprise Partnership projects.