What to Consider when Choosing a Medical Device Camera Sensor

Part 2: Incident Irradiance, Exposure, Gain, and Frame Rate

Camera sensors are common components of medical devices. In this blog I will discuss in detail how incident light irradiance, exposure time, gain, and frame rate factor into the use of medical device camera sensors.

The key takeaways are:

- To capture data or images on a medical device camera sensor with the highest possible signal-to-noise ratio (SNR) and dynamic range, the best practice is generally to first increase the amount of light hitting the sensor as much as possible without saturating. Then, if the signal is still not near saturation, to increase the exposure time (if allowed by the specific application).

- Gain can be applied to brighten an image, but in most cases does not improve the SNR and will lower the maximum possible dynamic range of the sensor. However, depending on the details of the sensor’s analog-to-digital converter (ADC) and when in the signal chain gain is applied, the dynamic range in a captured image can sometimes be effectively increased by increasing the gain.

- Frame rate requirements vary greatly depending on the application. Low frame rates are typically acceptable for simple photography, with moderate (24 Hz to 60 Hz) frame rates being appropriate for many straightforward video applications. Applications using real-time feedback can benefit from high video frame rates. In some specialized applications, such as flow cytometry and optical coherence tomography (OCT), even higher frame rates (kHz or more) are beneficial. High frame rates can also be useful for improving data quality through averaging.

Incident Irradiance, Exposure Time and Gain

Generally speaking, the most effective way to increase the SNR in a measured signal is to increase the amount of light incident on the camera sensor per unit time per unit area (i.e., the irradiance of light on the sensor). In typical camera lens systems, for a given scene, the irradiance incident on the sensor is set largely by the size of the aperture, the opening through which light passes as it moves through the lens and onto the sensor. Increasing the aperture size generally increases the irradiance falling on the sensor.

Greater irradiance on the medical device camera sensor generates a larger signal without increasing the sensor’s read or dark noise (as these originate from the sensor’s electronics[1]). There is therefore no additional detriment to either the SNR or achievable dynamic range of the sensor from these noise sources as the light level is increased. The shot noise inherently increases with the signal. However, assuming the sensor is not saturated (i.e., it has not reached the signal level where no more signal can be collected), the SNR will nonetheless increase, because the shot noise scales only as the square root of the signal counts: for $N$ signal counts the shot noise is $\sqrt{N}$, so when shot noise dominates the SNR is approximately $N/\sqrt{N} = \sqrt{N}$.
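To make the scaling concrete, the short Python sketch below computes the SNR of a single pixel, assuming the shot, read and dark noise are independent and therefore add in quadrature. The read-noise and dark-noise defaults (5 e- and 1 e-) are illustrative assumptions, not properties of any particular sensor.

```python
import math

def snr(signal_e, read_noise_e=5.0, dark_noise_e=1.0):
    """Signal-to-noise ratio for a pixel that collected `signal_e` photoelectrons.

    Shot noise is sqrt(signal); read and dark noise do not depend on the light
    level. The three are assumed independent, so they add in quadrature.
    """
    shot_noise_e = math.sqrt(signal_e)
    total_noise_e = math.sqrt(shot_noise_e**2 + read_noise_e**2 + dark_noise_e**2)
    return signal_e / total_noise_e

# Increasing the irradiance raises the collected signal N; compare the SNR
# with the shot-noise-only limit sqrt(N).
for n in (100, 200, 10_000, 20_000):
    print(f"N = {n:>6}: SNR = {snr(n):6.1f}, sqrt(N) = {math.sqrt(n):6.1f}")
```

At low signal levels the fixed read noise keeps the SNR noticeably below $\sqrt{N}$; as the signal grows, shot noise dominates and the SNR approaches $\sqrt{N}$.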

A medical device camera sensor’s exposure time setting controls the amount of time the sensor collects light for a single image capture. The longer the exposure time, the greater (brighter) the resulting signal (assuming the sensor is not saturated). In addition to increasing the signal, increasing the exposure time increases the dark noise (but not the read noise). The dark noise increases as the square root of the exposure time and increases with increasing temperature. In some special cases, such as very long exposures taken at relatively high temperatures, the dark noise may exceed the read noise and so become the dominant noise source at low signal levels. However, at most typical camera exposure times the read noise will remain the dominant noise source at low signal levels, and the effect of increasing the exposure time on the achievable dynamic range and SNR at low signal levels will be minimal [1]. Furthermore, shot noise will remain the dominant noise source at high signal levels, so the effect of increasing the exposure time on the SNR will be similar to that of increasing the incident irradiance on the sensor. Therefore, in most cases, increasing the exposure time is another effective way to increase the SNR of the measured signal without degrading the achievable dynamic range.
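The trade-off between dark noise and read noise can be sketched numerically. The Python below assumes a dark current of 0.1 e-/s per pixel and a read noise of 3 e- (hypothetical values; real dark current depends strongly on the sensor and its temperature) and finds the exposure time at which the dark noise catches up with the read noise.

```python
import math

def noise_budget(exposure_s, dark_current_e_per_s, read_noise_e):
    """Return (dark_noise_e, read_noise_e) for a single exposure.

    The dark signal grows linearly with exposure time, so its noise grows as
    sqrt(exposure); read noise is added once per readout, whatever the exposure.
    """
    dark_noise_e = math.sqrt(dark_current_e_per_s * exposure_s)
    return dark_noise_e, read_noise_e

def crossover_exposure_s(dark_current_e_per_s, read_noise_e):
    """Exposure time at which the dark noise equals the read noise."""
    return read_noise_e**2 / dark_current_e_per_s

DARK_CURRENT = 0.1  # e-/s per pixel (hypothetical)
READ_NOISE = 3.0    # e- rms (hypothetical)

for t in (0.01, 1.0, 90.0, 300.0):
    dark, read = noise_budget(t, DARK_CURRENT, READ_NOISE)
    print(f"{t:>7.2f} s exposure: dark noise {dark:5.2f} e-, read noise {read:.2f} e-")

print("dark noise overtakes read noise after", crossover_exposure_s(DARK_CURRENT, READ_NOISE), "s")
```

With these assumed values the dark noise only matches the read noise after about 90 s, far longer than typical camera exposures.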

Under certain conditions, short exposure times are necessary. For example, imaging fast-moving objects using long exposure times leads to blur due to changes in the object’s position/orientation during the light collection. Blur is unlikely to be desirable in non-artistic applications (although some exceptions exist, such as streak cameras) and so short exposures may be required to avoid it. A short exposure time may also be required to prevent saturation if a high incident irradiance cannot be reduced (though an attenuating optical filter can often be used instead). Finally, if the sensor is used in a video application, the maximum exposure time may be limited by the required frame rate, which will be discussed in the next section.
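A rough way to choose an exposure for a moving subject is to limit how far its image travels during the exposure. The sketch below assumes the object’s image moves at a known, constant speed in pixels per second and that the exposure cannot exceed one frame period (on real sensors, readout overhead can make the usable maximum somewhat shorter). The function names and the 1-pixel blur budget are illustrative choices.

```python
def motion_blur_px(speed_px_per_s, exposure_s):
    """Distance, in pixels, that the object's image moves during one exposure."""
    return speed_px_per_s * exposure_s

def max_exposure_s(speed_px_per_s, max_blur_px, frame_rate_hz):
    """Longest exposure that keeps blur within `max_blur_px` and fits in one frame.

    Assumes constant image-plane speed and ignores readout overhead, which can
    shorten the usable exposure on real sensors.
    """
    blur_limited_s = max_blur_px / speed_px_per_s
    frame_period_s = 1.0 / frame_rate_hz
    return min(blur_limited_s, frame_period_s)

# Hypothetical case: image moves at 2000 px/s, 1 px blur budget, 60 Hz video.
t = max_exposure_s(2000, 1, 60)
print(f"max exposure {t * 1e3:.2f} ms, blur {motion_blur_px(2000, t):.2f} px")

# A slow-moving subject is limited by the frame period instead.
print(f"max exposure {max_exposure_s(10, 1, 60) * 1e3:.2f} ms")
```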

The signal output from a medical device camera sensor may also be increased by increasing the sensor’s gain, which refers to the amplification of the signal recorded by the sensor. Gain can be applied in a number of non-equivalent ways, and the effects of changing the gain can depend on the details of the sensor’s electronics[2], particularly its analog-to-digital converter (ADC).

After photoelectrons are accumulated on a sensor, read out and possibly amplified, the ADC measures the accumulated charge and converts it to a numerical digital output. The number of possible digital output levels (or analog-to-digital units: ADUs) is determined by the ADC’s bit depth: an ADC with a bit depth of $X$ can output $2^X$ distinct signal levels (for example, 256 levels for an 8-bit ADC). For a given sensor and gain, there is a certain fixed number of photoelectrons required to move from one signal level to the next higher one.
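The relationship between bit depth, photoelectrons per ADU and the output code can be modelled with an idealized ADC, as in the Python sketch below. It ignores offsets, nonlinearity and noise, and the value of 10 photoelectrons per ADU is an arbitrary example rather than a property of any real sensor.

```python
def adc_levels(bit_depth):
    """Number of distinct output codes (ADUs) an ADC with this bit depth can produce."""
    return 2 ** bit_depth

def electrons_to_adu(electrons, electrons_per_adu, bit_depth):
    """Idealized conversion of accumulated photoelectrons to an output code.

    Every `electrons_per_adu` photoelectrons raise the code by one step; the
    code is clipped at the largest value the ADC can output.
    """
    code = int(electrons // electrons_per_adu)
    return min(code, adc_levels(bit_depth) - 1)

for bits in (8, 10, 12):
    print(f"{bits}-bit ADC: {adc_levels(bits)} levels")

# Hypothetical gain setting: 10 photoelectrons per ADU on an 8-bit ADC.
for e in (5, 250, 2549, 2550, 100_000):
    print(f"{e:>7} e- -> code {electrons_to_adu(e, 10, 8)}")
```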

In some cases, gain may be applied after the conversion to digital, which may simply mean that the numerical digital output from each of the sensor channels is multiplied by some factor (sometimes called “digital gain”). In that case, the SNR is not increased, as the noise and signal are both multiplied by the same factor (provided the increase in gain does not push the signal above the maximum ADU, in which case information in high-signal areas of the captured image would be lost). Furthermore, the maximum possible dynamic range will be decreased, as the noise floor will be raised and the maximum signal level that can be recorded without saturation will be lowered (for example, if the digital output has a maximum value of 255, as for an 8-bit ADC, and 2× digital gain is applied, any digital signal with an initial value above 127 will be saturated). For dark images, it may nonetheless be necessary to apply digital gain to brighten the image to a level that is practical for a human to look at.
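A small Python sketch of digital gain, assuming an 8-bit output (codes 0 to 255) and simple multiplication with clipping, shows both effects: the signal-to-noise ratio is unchanged, and distinct bright values collapse onto the maximum code.

```python
MAX_CODE = 255  # largest output code of an 8-bit ADC

def apply_digital_gain(codes, gain):
    """Multiply digital output codes by `gain`, clipping at MAX_CODE."""
    return [min(round(c * gain), MAX_CODE) for c in codes]

# Signal and noise are multiplied by the same factor, so their ratio is unchanged.
signal, noise = 40, 4
print("SNR before:", signal / noise, "after 2x:", (2 * signal) / (2 * noise))

# Codes above 127 saturate: 128 and 200 become indistinguishable after 2x gain.
print(apply_digital_gain([100, 127, 128, 200], 2))  # [200, 254, 255, 255]
```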

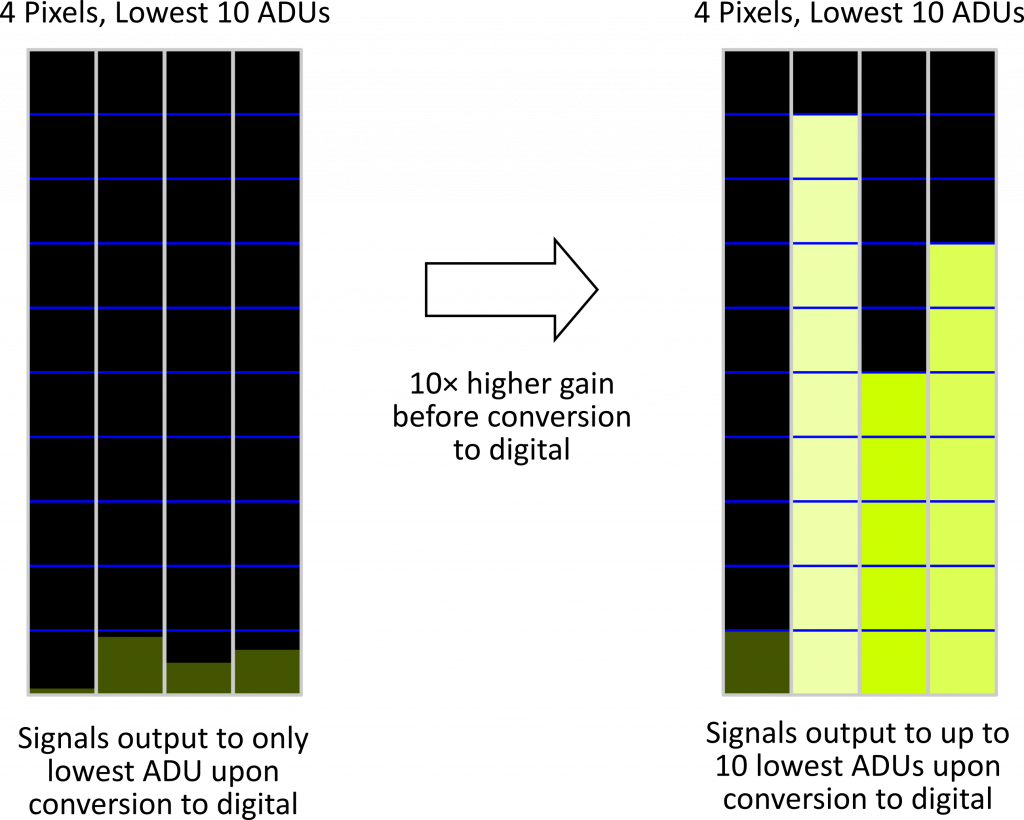

In some sensors, gain can be applied before the conversion to digital. Gain applied this way usually increases both the signal and noise by a similar factor, and so the effects on the SNR and maximum dynamic range are similar to those of digital gain. However, if the number of photoelectrons per ADU at a given gain setting is greater than 1, applying more gain can “spread out” the signal over more ADUs by effectively reducing the number of photoelectrons per ADU [2]. Assuming information isn’t lost to saturation after increasing the gain, this allows a greater number of light levels to be distinguished in the digital output. In some cases, particularly when signal levels are low, increasing the gain can increase the dynamic range in the captured image (the ratio of the highest to lowest measured signals; not to be confused with the maximum dynamic range of the sensor).

To take a concrete example, suppose we have a sensor and gain such that there are 100 photoelectrons per ADU, and we capture an image in which various signal levels, all below 100 photoelectrons, are collected on the sensor. All of these signals will produce the same output from the ADC, so the maximum possible dynamic range of the output digital image will be 1:1 (i.e., it will have no dynamic range). However, if a gain of 10× is applied before the conversion to digital, there will effectively be 10 photoelectrons per ADU and the signal will be spread over up to 10 ADUs, allowing more light levels to be distinguished. Furthermore, supposing the dark and read noise amount to less than 10 photoelectrons prior to increasing the gain, the maximum dynamic range in the output digital image will be 10:1. This method does not apply to sensors/gains with one or fewer photoelectrons per ADU, since in that case each additional photoelectron already produces a different ADU upon conversion to digital.
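The 100-photoelectrons-per-ADU example can be reproduced with an idealized ADC model. In this sketch, analog gain is modelled simply as dividing the number of photoelectrons per ADU by the gain factor, and noise is ignored, so it illustrates only the quantization effect, not the amplified noise.

```python
def quantize(electrons, electrons_per_adu):
    """Idealized ADC: the output code is the number of whole ADU steps in the signal."""
    return int(electrons // electrons_per_adu)

signals_e = [5, 25, 50, 75, 99]  # various signals, all below 100 photoelectrons

# 100 photoelectrons per ADU: every signal produces the same code.
low_gain_codes = [quantize(s, 100) for s in signals_e]

# 10x analog gain before the ADC, modelled as 100 / 10 = 10 photoelectrons per ADU.
high_gain_codes = [quantize(s, 100 / 10) for s in signals_e]

print(low_gain_codes)   # [0, 0, 0, 0, 0]
print(high_gain_codes)  # [0, 2, 5, 7, 9]

# Any signal from 0 to 99 photoelectrons now falls into one of 10 distinct codes.
print(len({quantize(s, 10) for s in range(100)}))  # 10
```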

In general, the best practice to optimize the SNR and dynamic range in a measured signal for a given sensor is to first increase the incident irradiance as much as possible without saturating. Next, if necessary, increase the exposure time such that the collected signal is near saturation (to the extent that the specific application allows). If the signal is still not near saturation, gain can then be applied to brighten a captured image or to increase the number of gradations in the output digital signal (assuming gain is applied before the ADC and the number of photoelectrons per ADU is greater than 1 before increasing the gain). Adding gain reduces the maximum possible dynamic range of a sensor and does not improve the SNR in most cases. However, in cases such as the one described immediately above, the dynamic range in a captured image can be effectively improved by increasing the gain before the ADC.
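The recommended order can be expressed as a simple planning function. This is a hypothetical sketch rather than a vendor algorithm: it assumes the irradiance has already been maximized (step 1), that the signal rate in the brightest pixel is known in photoelectrons per second, and that the ADC full scale corresponds to the full-well capacity at unity gain, so the returned gain is just the brightening factor needed to reach the target level.

```python
def plan_capture(signal_rate_e_per_s, full_well_e, max_exposure_s, target_fill=0.8):
    """Hypothetical helper following the recommended order of adjustments.

    Step 1 (maximize irradiance without saturating) is assumed already done,
    giving `signal_rate_e_per_s` photoelectrons per second in the brightest pixel.
    Step 2: lengthen the exposure until that pixel reaches `target_fill` of the
    full-well capacity, or until the application's exposure limit.
    Step 3: if the signal is still short of the target, return the gain needed
    to brighten it to the target level (this does not improve the SNR).
    """
    target_e = target_fill * full_well_e
    exposure_s = min(target_e / signal_rate_e_per_s, max_exposure_s)
    collected_e = signal_rate_e_per_s * exposure_s
    gain = max(1.0, target_e / collected_e)
    return exposure_s, collected_e, gain

# Hypothetical sensor: 10,000 e- full well, 50,000 e-/s in the brightest pixel.
print(plan_capture(50_000, 10_000, max_exposure_s=1.0))  # exposure alone suffices
print(plan_capture(50_000, 10_000, max_exposure_s=0.1))  # exposure capped; gain ~1.6
```

Gain is deliberately the last resort here, mirroring the ordering in the text: it only brightens what the exposure could not reach.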

Frame Rate

Another consideration related to image capture time is the sensor’s frame rate. This term refers to the rate (in Hertz, Hz, or equivalently, frames per second, fps) at which a sensor can collect separate images. Requirements for frame rate can vary greatly depending on the application. For capturing still images, a low frame rate is often acceptable, provided the time to output an image does not cause excessive waiting after image capture. On the other hand, if you wish to record a series of images of a rapidly changing scene, a high frame rate may be required.

Typical frame rates for video range from 24 Hz to 60 Hz. (24 Hz is the frame rate at which films have traditionally been shot and displayed, and many continue to be shot at this frame rate today.) Video displayed at a 60 Hz frame rate will result in the perception of smooth motion for most people[3].

In cases where a real-time video feed is being used, a low frame rate increases perceptible latency in the displayed signal. If video is captured and displayed at 30 Hz, there will be at least a 1/30 s (about 33 ms) delay between consecutive images, and motion during this interval will not be seen on the screen. This delay is long enough to be perceptible and is not optimal if real-time feedback is being used, such as when the camera is used to monitor precise surgical operations. Ideally, video used for such a purpose should be captured and displayed at a rate high enough that motion is perceived as smooth and instantaneously responsive to the end user’s actions.
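The minimum delay between frames is simply the frame period, $1/f$. The Python snippet below tabulates it for a few common frame rates; actual end-to-end latency will be longer, since exposure, readout, processing and display each add their own delay.

```python
def frame_period_ms(frame_rate_hz):
    """Time between consecutive frames, in milliseconds: 1000 / f."""
    return 1000.0 / frame_rate_hz

for rate in (24, 30, 60, 120):
    print(f"{rate:>4} Hz -> at least {frame_period_ms(rate):5.1f} ms between frames")
```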

Higher-than-typical frame rates can be used to capture very fast changes in an imaged object or to rapidly capture data. Certain medical applications, such as some implementations of flow cytometry and optical coherence tomography (OCT), can benefit from such high frame rates. In flow cytometry, which is a method of investigating the characteristics of cells, fast camera sensors can enable hundreds of cells to be imaged and characterized in less than a second. In OCT, fast medical device camera sensors can be used to enable multiple 3D volumes (of biological tissue, for example) to be imaged in a single second (imaging such 3D volumes using camera-sensor-based OCT requires the collection of many camera frames). Under some conditions, rapid data collection can also be useful for improving data quality through averaging, without unacceptably increasing the data collection time.
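As a rough guide to the averaging trade-off, the sketch below assumes the noise is uncorrelated from frame to frame and the scene does not change during the acquisition. Under those assumptions, averaging $n$ frames improves the SNR by a factor of $\sqrt{n}$, and a faster sensor shortens the time needed to collect them. The single-frame SNR of 10 is a hypothetical value.

```python
import math

def averaged_snr(single_frame_snr, n_frames):
    """SNR after averaging n frames, assuming frame-to-frame noise is uncorrelated."""
    return single_frame_snr * math.sqrt(n_frames)

def acquisition_time_s(n_frames, frame_rate_hz):
    """Time needed to capture n frames at the given frame rate."""
    return n_frames / frame_rate_hz

# Hypothetical case: a single frame has SNR 10 and we average 16 frames.
n = 16
print("averaged SNR:", averaged_snr(10, n))
for rate in (30, 1_000, 10_000):
    print(f"{rate:>6} Hz: {acquisition_time_s(n, rate) * 1e3:8.2f} ms to collect {n} frames")
```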

Cameras capable of high frame-capture rates have become more commonplace in recent years. A number of sensors used in smartphones today are capable of capturing video at up to 960 Hz [3], which until recently would have only been possible using specialized “high-speed” cameras. The fastest medical device camera sensors today can reach frame rates well into the kHz regime (for example, some line camera sensors can reach over 100 kHz frame rates). For technical reasons, the frame rate of camera sensors is sometimes tied to the resolution at which the image is taken, with the maximum frame rate only being achievable when a reduced resolution is used (either by combining the signal from multiple pixels – i.e. binning – or sampling only a subset of the total pixels).
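The two ways of reducing resolution mentioned above can be illustrated on a small array of pixel values. This Python sketch performs 2×2 binning and 2× subsampling in software on data that has already been read out, so it only shows how the signal is combined or discarded; whether either operation raises the frame rate depends on whether the sensor itself performs it before readout.

```python
def bin_2x2(image):
    """Sum each non-overlapping 2x2 block of pixels into one output pixel.

    `image` is a list of equal-length rows with even height and width; the
    result has half as many rows and columns, and each value holds the combined
    signal of four pixels.
    """
    rows, cols = len(image), len(image[0])
    return [
        [image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
         for c in range(0, cols, 2)]
        for r in range(0, rows, 2)
    ]

def subsample_2x(image):
    """Keep every second pixel in each direction and discard the rest."""
    return [row[::2] for row in image[::2]]

frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(frame))       # [[14, 22], [46, 54]]
print(subsample_2x(frame))  # [[1, 3], [9, 11]]
```

Binning keeps all of the collected light in fewer, larger effective pixels, while subsampling simply discards three quarters of it.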

Conclusion

This blog series discusses various things to consider when choosing a medical device camera sensor.

In this blog I have discussed the factors that should be considered to capture images/signals with the highest quality possible. I discussed the incident light irradiance, exposure time and sensor gain and how they affect the signal-to-noise ratio (SNR) and/or dynamic range. I have also discussed the impact of frame rate – the rate at which images can be captured and subsequently displayed.

The first entry in this blog series gave an overview of signal and noise sources and associated figures of merit, and discussed the impact of the sensor resolution and pixel size. The next blog will focus on the available medical device camera sensor types and technologies.

[1] Dark noise, shot noise and read noise were defined in the previous blog in this series.

[2] In consumer cameras, gain is often related to the ISO setting which, roughly speaking, is used to match the brightness of an output image for a given light level to that of old film photosensitivity standards (i.e., film speed). However, different consumer cameras implement ISO settings in different ways, not all of which are equivalent to increasing gain [4].

[3] Although 60 Hz video will be perceived as smooth motion to most people, some people can nonetheless see a further enhancement in perceived smoothness at even higher frame rates. Displays with frame rates higher than 60 Hz (such as 144 Hz displays) have recently become popular among some users, particularly PC gamers.

References

| [1] | Thorlabs, “Camera Noise and Temperature Tutorial,” [Online]. Available: https://www.thorlabs.com/newgrouppage9.cfm?objectgroup_id=10773. [Accessed 9 April 2021]. |

| [2] | Edmund Optics, “Imaging Electronics 101: Basics of Digital Camera Settings for Improved Imaging Results,” [Online]. Available: https://www.edmundoptics.com/knowledge-center/application-notes/imaging/basics-of-digital-camera-settings-for-improved-imaging-results/. [Accessed 30 April 2021]. |

| [3] | H. Simons, “Who actually has real 960fps super slow-motion recording?,” Android Authority, 13 July 2019. [Online]. Available: https://www.androidauthority.com/real-960fps-super-slow-motion-999639/. [Accessed 14 December 2020]. |

| [4] | R. Butler, “You probably don’t know what ISO means – and that’s a problem,” Digital Photography Review, 6 August 2018. [Online]. Available: https://www.dpreview.com/articles/8924544559/you-probably-don-t-know-what-iso-means-and-that-s-a-problem. [Accessed 23 April 2021]. |

Image: Can Stock Photo / Voyagerix

Ryan Field is an Optical Engineer at StarFish Medical. Ryan holds a PhD in Physics from the University of Toronto. As a postdoctoral fellow, he worked on the development of high-power picosecond infrared laser systems for surgical applications as well as a spectrometer made from household materials.