5 Tips for medical device cyber security

I have just returned from a meeting in Washington where I was fortunate enough to be invited to attend a working group on a Cyber Security Standard for Connected Diabetes Devices. Dr. David Klonoff of the Diabetes Technology Society is putting together a consensus standard for cyber security. As developers of medical devices, how do we limit opportunities for attacks to happen? This blog covers the basics of cyber security as it relates to medical devices, and gives 5 tips for developing hack-resistant medical devices.

Cyber security is not new. Hollywood covered the topic in the 1983 classic WarGames, in which a teenaged Matthew Broderick hacked into a Department of Defense supercomputer and almost started World War III with the Soviets. Hacking has existed in the form of ‘phreaking’ since the late 1950s, when tech-savvy nerds (what we now call ‘hackers’) began to devise workarounds to obtain long-distance calls for free, famously bypassing complicated equipment with a toy whistle given away free in a cereal box.

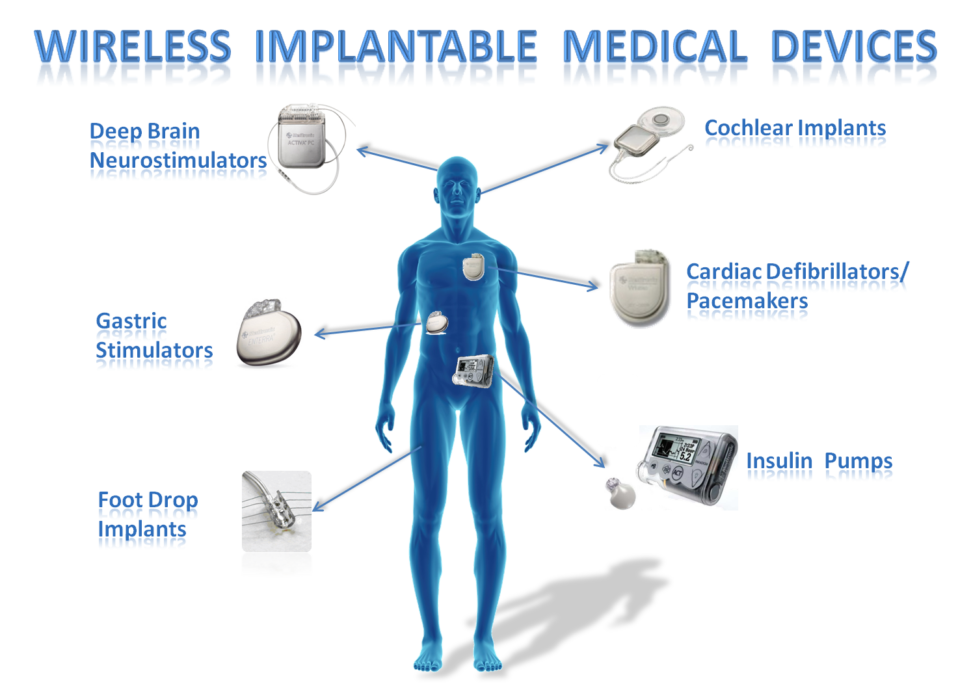

How does this relate to medical devices? One dimension is the need to safeguard any patient data which may be present (HIPAA, for example). Another dimension is protecting the function of the medical device itself. Smart technology has opened up previously inaccessible devices to potentially malicious use. Essentially, any technology with the word ‘Smart’ in its name seems to be connected to the internet, and is hence vulnerable to remote attack.

Hollywood highlighted this in the December 2012 broadcast of Homeland, where an evil grinning hacker remotely assassinated the Vice President of the United States by instigating a pacemaker-induced heart attack. Although the public were shocked, the writers of Homeland were extrapolating from information which had been disseminated 4 years earlier. A talented ‘White Hat’ (i.e. not malicious) hacker, New Zealander Barnaby Jack, demonstrated that this was actually possible (albeit at closer range), and also demonstrated that insulin pumps can be hacked to deliver potentially fatal doses. Barnaby died in 2013 from a drug overdose.

Tips

I have written before that there are lifecycle standards for software development. Here, the software should be designed with requirements, architecture and subsequent specs which are verified, and the whole system is validated against the requirements. I mentioned that it is very difficult to bolt on the regulatory documentation at the end. In the same way, bolting on security at the end is also very difficult – this should be considered from the outset.

Here are my 5 tips for medical device cyber security:

Safety is not Security

Developers of medical devices are familiar with the concept of devices which are either fail-safe, for low-risk devices, or fail-over, for devices which must maintain a certain level of performance. ISO 14971 defines a risk management process that estimates and evaluates risk, allowing identification of when mitigations must be implemented and then subsequently verified. For devices which must fail over, the IEC 60601 family of standards defines the essential performance which must be maintained for a large variety of medical device types.

An example which shows the difference between safety and security is the reverse park assist feature found on newer cars, which turns the steering wheel for you as you back into a parking spot. A risk analysis might identify accidental activation of the reverse park feature while travelling at highway speeds, and the mitigation would be to disable the feature while travelling at speeds greater than 5 km/h.

From a security perspective, this mitigation is not sufficient, because a hacker who has compromised a car’s Controller Area Network (CAN) bus could issue a command indicating that the speed is 3 km/h and then issue a second command to engage the reverse park feature. Once the system is compromised, it is trivial to issue multiple commands. The security risk mitigation here could be architecture related, for example dual CAN bus backbones with different allowed commands on each bus, which would require an attacker to compromise both backbones.

Closer to home, the insulin pump security mitigation could be to require user confirmation for remote-controlled administration of large doses, and/or to limit the maximum number of doses in a given time period.
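To make the insulin pump mitigations above concrete, here is a minimal Python sketch. The class name BolusGuard, the 5-unit confirmation threshold and the limit of 3 doses per hour are all hypothetical values chosen for illustration; real limits would come from your clinical risk analysis, and a real pump would implement this in its firmware rather than in Python.

```python
from collections import deque


class BolusGuard:
    """Hypothetical gatekeeper for remotely commanded insulin doses."""

    def __init__(self, confirm_above_units=5.0, max_doses=3, window_s=3600.0):
        self.confirm_above_units = confirm_above_units  # assumed threshold
        self.max_doses = max_doses                      # assumed limit
        self.window_s = window_s                        # rolling window length
        self._accepted = deque()                        # times of accepted doses

    def request(self, units, now_s, user_confirmed=False):
        """Return (accepted, reason) for a remote dose request at time now_s."""
        # Forget doses that have fallen out of the rolling time window.
        while self._accepted and now_s - self._accepted[0] >= self.window_s:
            self._accepted.popleft()
        if units <= 0:
            return False, "invalid dose"
        if len(self._accepted) >= self.max_doses:
            return False, "too many doses in window"
        if units > self.confirm_above_units and not user_confirmed:
            return False, "user confirmation required"
        self._accepted.append(now_s)
        return True, "ok"


guard = BolusGuard()
print(guard.request(8.0, now_s=0.0))                       # large dose, unconfirmed
print(guard.request(8.0, now_s=5.0, user_confirmed=True))  # confirmed on the device
```

Note that the confirmation has to come from the device itself (a button press, for example), not from the remote link, otherwise a compromised link can simply confirm its own request.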

Read the FDA Guidance

The FDA released a guidance document titled ‘Content of Premarket Submissions for Management of Cybersecurity in Medical Devices’ in October 2014.

This guidance document describes, at a minimum, a risk assessment approach to cybersecurity:

1) Identification of threats, assets (something we value) and vulnerabilities

2) Assessment of the impact of threats and vulnerabilities being exploited

3) Determination of risk level and suitable mitigation strategies

4) Assessment of residual risk and risk acceptance criteria

This risk-based approach should be familiar to medical device developers since it mirrors ISO 14971. It would also be good practice to verify, where possible and practical, that any mitigations which are not part of the architecture are effective.
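As an illustration of steps 3 and 4 above, here is a small Python sketch of a risk matrix. The 1 to 5 scales, the score thresholds and the names Threat, risk_level and residual_level are my own assumptions for the example; neither the FDA guidance nor ISO 14971 prescribes these numbers, and your own risk acceptance criteria should be used instead.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: int  # 1 (rare) to 5 (frequent), hypothetical scale
    impact: int      # 1 (negligible) to 5 (catastrophic), hypothetical scale


def risk_level(likelihood, impact):
    """Map a likelihood/impact pair to a level using assumed thresholds."""
    score = likelihood * impact
    if score >= 15:
        return "unacceptable"
    if score >= 6:
        return "mitigate"
    return "acceptable"


def residual_level(threat, likelihood_reduction):
    """Risk level after a mitigation that only lowers the likelihood."""
    new_likelihood = max(1, threat.likelihood - likelihood_reduction)
    return risk_level(new_likelihood, threat.impact)


spoofed_dose = Threat("spoofed remote dose command", likelihood=4, impact=5)
print(risk_level(spoofed_dose.likelihood, spoofed_dose.impact))
print(residual_level(spoofed_dose, likelihood_reduction=3))
```

The sketch also shows why high-impact threats are awkward: a mitigation that only reduces likelihood can never bring the impact down, so the residual risk still has to be judged against the acceptance criteria.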

The guidance document also describes a framework for cybersecurity core activities, referring to the “Framework for Improving Critical Infrastructure Cybersecurity”, of which version 1.1 was published by the National Institute of Standards and Technology (NIST) in 2018. The features of the framework are discussed below:

1) Identify – As above, evaluate the possible attack vectors early in your system architecture.

2) Protect – Where possible, mitigate by modifying the system architecture. For example, use an internal debug port as opposed to an externally accessible one, as discussed below. For those attack vectors which cannot be protected via architecture changes, implement mitigations which will make it more difficult for an attacker to compromise the system, such as a maximum number of login retries before a timed lockout. Consider how to protect data, with encryption both in transit and at rest. Also consider layered approaches such as limited access, multi-factor authentication and varying user privileges or roles.

3) Detect – Consider implementing features which will allow hacking attempts to be detected, such as login audit trails. Even simple devices can have a tamper-detect or case-open switch.

4) Respond – As discussed above for IEC 60601 and essential performance, implement features which will continue to provide critical functionality.

5) Recover – Provide methods for restoration of normal device operation by an authenticated user with appropriate privileges. One option is the ‘Factory Reset’ button.
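Two of the items above, the timed lockout under Protect and the login audit trail under Detect, fit naturally together. The following Python sketch shows one way they could be combined. LoginGuard, its parameters and the event strings are hypothetical names for illustration, and the password check is passed in as a function so that the sketch makes no assumption about how credentials are stored.

```python
class LoginGuard:
    """Hypothetical login gate with retry limit, timed lockout and audit trail."""

    def __init__(self, check_password, max_retries=3, lockout_s=300.0):
        self._check = check_password   # callable: password -> bool
        self.max_retries = max_retries
        self.lockout_s = lockout_s
        self._failures = 0
        self._locked_until = 0.0
        self.audit_log = []            # list of (time, event) tuples

    def attempt(self, password, now_s):
        """Return True only for a correct password outside a lockout period."""
        if now_s < self._locked_until:
            # During lockout even a correct password is refused, and logged.
            self.audit_log.append((now_s, "rejected: locked out"))
            return False
        if self._check(password):
            self._failures = 0
            self.audit_log.append((now_s, "login ok"))
            return True
        self._failures += 1
        self.audit_log.append((now_s, "login failed"))
        if self._failures >= self.max_retries:
            self._locked_until = now_s + self.lockout_s
            self._failures = 0
            self.audit_log.append((now_s, "lockout started"))
        return False


guard = LoginGuard(lambda p: p == "correct horse", max_retries=2, lockout_s=60.0)
for t, guess in enumerate(["1234", "admin", "correct horse"]):
    print(t, guard.attempt(guess, now_s=float(t)))
print(guard.audit_log)
```

On a real device the audit log would need to be stored somewhere an attacker cannot quietly erase it, which is an architecture decision rather than a coding one.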

Be Pragmatic

Engineers usually make pragmatic decisions when developing a system architecture, but an awareness of the security issues above can make those decisions much more powerful. This works best when security is presented not as a regulatory issue but as a technical challenge – challenge your team to hack their own products.

For example, Bluetooth LE includes an encryption feature which is relatively well known, and a less well-known Privacy feature which changes a device’s address over time. Using a chipset which supports the Privacy feature would make it harder for an attacker to track the device and sniff its data.

Another example is root access. While it might be convenient to have a development debug port on the back of the machine, this is a prime attack vector. Hence, debug ports should at a minimum be disabled in production firmware and covered or hidden, but preferably should not be exposed outside the enclosure or even populated. Root access on a range of Android phones has been obtained by accessing a ‘hidden’ debug port which was brought out on the USB port via a USB multiplexer.

Backdoor passwords are another example. A long time ago, an ‘Internet computer’ was sold very cheaply. This device employed a proprietary OS which dialed (dialup shows just how long ago) a certain subscription-based internet provider, and popup ads were displayed while you surfed. The device was a regular PC with a hardcoded BIOS password – enter the password, install Windows 95 and you had an at-the-time medium performance PC for the price of a family trip to the cinema. This shows that hardcoded passwords should never be used, as they will almost certainly be discovered.
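One common alternative to a hardcoded password is to have each device store only a salted hash of a password that is set per device or per user. The Python sketch below shows the idea using the standard library’s hashlib.pbkdf2_hmac. The function names make_record and verify and the iteration count are my own choices for illustration; an embedded device would use an equivalent function from its own crypto library.

```python
import hashlib
import hmac
import os


def make_record(password, iterations=200_000):
    """Create a per-device credential record; the password itself is not stored."""
    salt = os.urandom(16)  # unique random salt for every device or user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return {"salt": salt, "iterations": iterations, "digest": digest}


def verify(password, record):
    """Check a login attempt against the stored record in constant time."""
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), record["salt"], record["iterations"]
    )
    return hmac.compare_digest(candidate, record["digest"])


record = make_record("set-at-first-use")
print(verify("set-at-first-use", record))
print(verify("factory-backdoor", record))
```

Because the salt is random, two devices with the same password hold different records, so extracting one device’s firmware does not reveal a secret that unlocks every other unit.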

Firmware updates are a popular attack vector. Ensure only signed, authorized firmware can be installed, and only authenticated users with appropriate privileges can install firmware updates. Is it a good idea to enable over-the-air firmware updates, or should only a hardwired connection permit an update? Also consider software rollbacks – do you want to allow old, buggy software to be installed over newer software?
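The three firmware checks above (authorized user, authentic image, no rollback) can be sketched in a few lines of Python. One loud caveat: to keep the example self-contained I have used HMAC-SHA256 from the standard library as a stand-in for a real digital signature. A production device should verify an asymmetric signature (Ed25519 or ECDSA, for example) so that no signing secret lives on the device. The names firmware_tag and may_install are hypothetical.

```python
import hashlib
import hmac


def firmware_tag(key, version, image):
    """Authentication tag over version and image (HMAC stand-in for a signature)."""
    # The version is bound into the tag so it cannot be altered separately.
    message = version.to_bytes(4, "big") + image
    return hmac.new(key, message, hashlib.sha256).digest()


def may_install(key, installed_version, version, image, tag, user_is_authorized):
    """Return (allowed, reason) for a proposed firmware update."""
    if not user_is_authorized:
        return False, "user not authorized"
    if not hmac.compare_digest(firmware_tag(key, version, image), tag):
        return False, "bad signature"
    if version <= installed_version:
        return False, "rollback refused"
    return True, "ok"


key = b"demo key, illustration only"
image = b"\x00\x01firmware bytes"
tag = firmware_tag(key, 7, image)
print(may_install(key, 6, 7, image, tag, user_is_authorized=True))
print(may_install(key, 8, 7, image, tag, user_is_authorized=True))
print(may_install(key, 6, 7, image + b"!", tag, user_is_authorized=True))
```

Refusing every rollback is a policy decision, not a given. Some teams prefer to allow a rollback to a specific known-good version, in which case the check would compare against an allowed minimum version instead.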

Regulatory Compliance

Assuming your device qualifies for a 510(k), the FDA does not require a 510(k) resubmission for software updates which remove security vulnerabilities, provided the intended use, features and performance remain the same as in the original submission. You should, of course, be following your software development Design Controls process, required for design changes by 21 CFR 820.30(i), even for FDA Class I devices. You should be reviewing, verifying and validating as you go, with appropriate levels of documentation and notes to file justifying the actions.

In addition, there is an argument for saying that it is a manufacturer’s responsibility to be vigilant and make security updates, as code with known vulnerabilities could be considered ‘putrid’, which would make the device adulterated under the Food, Drug & Cosmetic Act, 21 U.S.C. 351(a)(1). Code rot has regulatory consequences.

Security Assurance

The approach described above constitutes the bare minimum for how a developer might manage cyber security when developing medical devices. However, the de facto cyber security standard is ISO/IEC 15408-1:2009, Information technology — Security techniques — Evaluation criteria for IT security — Part 1: Introduction and general model, which introduces the Common Criteria framework. As with product requirements, the Common Criteria allows manufacturers to specify their security and assurance requirements using Protection Profiles (PPs), permitting testing laboratories to evaluate and determine whether the security claims have been met. This may have a marketing advantage – which would you buy, a medical device that has been certified as security tested or one that has not? Developing Protection Profiles and assurance testing documentation is left as a topic for another blog.

Conclusion

Beyond curiosity, there must be a net benefit for sustained, commercial-level hacking activity. Hence, you should carefully consider your device risk classification, attack vectors and risk of harm to patients when evaluating the impact of hacking on your medical device.

There are many more would-be hackers than there are engineers on your development team, but not all of them succeed. Hackers can make many mistakes while hacking your device, or simply be mediocre and unsuccessful.

Vincent Crabtree, PhD is a frequent contributor to StarFish Medical blogs and a leader in Diabetes Management Device Technology R&D.

Image: MIT Computer Science & Artificial Intelligence Lab